Are AI Agents the New Microservices?

Introduction

Back in my school days, the internet was still young, Yahoo was in its infancy, and websites were few. Our learning resources were limited to books like The C Programming Language by Kernighan and Ritchie, The Design of the UNIX Operating System by Bach, and Advanced Programming in the UNIX Environment by Richard Stevens. Beyond that, we relied heavily on the man command (short for "manual") to learn tools like vi, which remained my preferred editor for years. If someone asked a basic question, we’d respond with “RTFM” (Read The Fine Manual).

Times changed, and “RTFM” evolved into Let Me Google That For You (LMGTFY) for those who preferred asking rather than searching. Now, with OpenAI’s Generative Pre-trained Transformer (GPT) models and other Large Language Models (LLMs), we’ve entered the Let Me Prompt That For You (LMPTFY) era, where AI understands natural language and generates answers faster than ever.

The AI race today mirrors the dot-com boom: companies and individuals alike are scrambling to stake their claim, fearing they’ll miss out on the next big breakthrough. In the late '90s, businesses rushed to build anything with “.com” in their name, just as today’s companies are racing to integrate AI Agents and Model Context Protocol (MCP) clients and servers into their products and services.

Just as microservices reshaped software architecture, AI Agents are redefining how we build intelligent systems. Professionals now feel the pressure to upskill in AI, much as software engineers of the dot-com era had to transition from C to Java.

In this post, I’ll explore the parallels between Microservices and AI Agents, breaking down how LLMs, AI Agents, MCP Clients/Servers, and AI tools fit into this evolving architecture.

Microservice Revolution

Microservice architectures started gaining popularity in the early 2010s, alongside the rise of cloud computing. Netflix was a pioneer in this space, breaking apart its monolithic architecture and deploying services in the AWS cloud. While Netflix led the microservices movement, the foundation was laid earlier by Jeff Bezos in his now-famous 2002 email, often referred to as the API Mandate. In it, he required all Amazon teams to communicate strictly through service interfaces, effectively enforcing an early version of microservices. This philosophy later shaped AWS services and became a cornerstone of modern microservices best practices.

With the rise of containers and Kubernetes, companies like Uber and Spotify embraced microservices to enable independent teams, accelerate development cycles, and scale more effectively. However, Uber’s architecture became so fragmented by its sheer number of microservices that the company later consolidated them into macroservices: larger service units that reduced complexity while maintaining modularity.

Microservices transformed software development, but they remain divisive: some engineers love the flexibility, while others struggle with the complexity they introduce. In my experience, microservices are like pineapple on pizza: you either love them or hate them.

Rise of the AI Agents

While there is no universally accepted standard for LLM APIs, OpenAI’s APIs have become the de facto choice for many developers, and they can easily be called from within microservices when needed. Microservices are excellent for building modular, scalable applications, but they operate within strict, predefined APIs and a rigid request-response model. For instance, a flight reservation service may simply fail when an unexpected scenario arises, because it cannot recover beyond the paths its developers coded in advance. Microservices lack autonomy, decision-making, and contextual adaptation, which are key traits for handling complex, unstructured tasks.

This is where AI Agents bridge the gap. Unlike traditional microservices, AI Agents bring autonomy, contextual adaptation, and goal-driven execution. They don’t just respond to requests; they can make decisions, adjust dynamically, and handle unstructured tasks, making them a natural evolution beyond microservices.

AI Agents: Beyond Traditional Automation

AI Agents go beyond traditional automation. Rather than just executing predefined APIs as microservices do, they reason, plan, and act autonomously to manage complex, end-to-end workflows. They ingest data, interact with APIs or other agents, and refine their behavior over time. AI Agents rely on LLMs for reasoning, and on Retrieval-Augmented Generation (RAG) and memory for the context that improves their decisions.

Key Characteristics of AI Agents:

Autonomous: Operates independently with minimal human input.

Adaptive: Learns and evolves based on feedback.

Goal-Oriented: Uses reasoning to accomplish specific goals.

Multi-Step Planning: Decomposes complex objectives into manageable steps.
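To make these characteristics concrete, here is a minimal sketch of an agent loop in Python. It does not reflect any particular framework: the hypothetical `decide_next_step` function stands in for an LLM planner and simply walks a hard-coded three-step plan, while `run_agent` shows the reason, act, and observe cycle, with memory recording each outcome so that a failed step is retried on the next iteration. The flight-booking tools are illustrative assumptions.

```python
from typing import Callable, Optional

Tool = Callable[[str], str]
Memory = list[tuple[str, bool, str]]  # (step, succeeded?, observation)

# Hypothetical stand-in for an LLM planner: a real agent would prompt a model
# with the goal and its memory. Here the plan is hard-coded for illustration.
PLAN = ["search_flights", "book_flight", "send_confirmation"]

def decide_next_step(memory: Memory) -> Optional[str]:
    """Return the first planned step that has not succeeded yet, or None when done."""
    succeeded = {step for step, ok, _ in memory if ok}
    for step in PLAN:
        if step not in succeeded:
            return step
    return None

def run_agent(destination: str, tools: dict[str, Tool], max_steps: int = 10) -> Memory:
    """Goal: book a trip to `destination`. Loop: decide, act, observe, remember."""
    memory: Memory = []
    for _ in range(max_steps):                       # bound the loop so it always terminates
        step = decide_next_step(memory)              # reason / plan
        if step is None:
            break                                    # every planned step succeeded
        try:
            observation = tools[step](destination)   # act
            memory.append((step, True, observation))
        except Exception as exc:                     # observe the failure; the planner retries it
            memory.append((step, False, str(exc)))
    return memory

# Usage: booking fails once, and the agent retries it on the next iteration.
attempts = {"book_flight": 0}

def flaky_book(dest: str) -> str:
    attempts["book_flight"] += 1
    if attempts["book_flight"] == 1:
        raise RuntimeError("seat no longer available")
    return f"booked flight to {dest}"

tools: dict[str, Tool] = {
    "search_flights": lambda d: f"found flights to {d}",
    "book_flight": flaky_book,
    "send_confirmation": lambda d: f"confirmation sent for {d}",
}
log = run_agent("Paris", tools)
for step, ok, observation in log:
    print(step, "ok" if ok else "failed", "-", observation)
```

Bounding the loop with `max_steps` is a deliberate choice: if a step keeps failing, the agent stops instead of retrying forever.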

Tools

Tools in AI agents are like specialized instruments in a surgeon's kit. Accessed via APIs or plugins, they extend an agent's capabilities beyond pure language processing. Agents select and use tools based on the task at hand, such as a search engine API for information retrieval, filesystem APIs for reading local files, or a code interpreter for executing scripts. These tools let agents interact with the real world and perform actions that a language model cannot perform on its own.
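One way a tool registry might look is sketched below. Each tool carries a description, which in a real agent is what the LLM reads when choosing a tool; because this example has no model, a naive keyword-overlap score stands in for that choice. The `Tool` class, the keyword sets, and the stubbed `web_search` are hypothetical, and the code interpreter is reduced to a safe arithmetic evaluator rather than arbitrary code execution.

```python
import ast
import operator
from dataclasses import dataclass
from typing import Callable

@dataclass
class Tool:
    name: str
    description: str          # what an LLM would read when choosing a tool
    keywords: frozenset[str]  # hypothetical matching signal for this sketch only
    run: Callable[[str], str]

def web_search(query: str) -> str:
    return f"(stub) top result for {query!r}"  # a real tool would call a search API

def read_file(path: str) -> str:
    with open(path, encoding="utf-8") as f:
        return f.read()

_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}

def calculate(expression: str) -> str:
    """Evaluate + - * / on numbers by walking the AST (no eval of arbitrary code)."""
    def ev(node: ast.AST) -> float:
        if (isinstance(node, ast.Constant) and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](ev(node.left), ev(node.right))
        raise ValueError("unsupported expression")
    return str(ev(ast.parse(expression, mode="eval").body))

TOOLS = [
    Tool("web_search", "Search the web for information.", frozenset({"search", "find", "look"}), web_search),
    Tool("read_file", "Read a local text file.", frozenset({"read", "file", "open"}), read_file),
    Tool("calculator", "Evaluate an arithmetic expression.", frozenset({"calculate", "compute"}), calculate),
]

def select_tool(request: str) -> Tool:
    """Pick the tool whose keywords overlap most with the words in the request."""
    words = set(request.lower().split())
    best = max(TOOLS, key=lambda t: len(t.keywords & words))
    if not best.keywords & words:
        raise LookupError(f"no tool matches {request!r}")
    return best

tool = select_tool("calculate 2 * (3 + 4)")
print(tool.name, tool.run("2 * (3 + 4)"))  # calculator 14
```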

Model Context Protocol: The Missing Link?

Calling tools directly from LLMs or AI Agents can lead to inconsistent communication, lost context across calls, limited orchestration, security risks, and vendor lock-in. To act intelligently, agents must also persist and retrieve knowledge dynamically. Anthropic introduced MCP as an open standard to address these issues by giving AI Agents a uniform way to access context, memory, and tool execution capabilities. An MCP Server standardizes interactions, maintains session context, enforces security, and enables flexible tool orchestration, making AI-driven systems more scalable and adaptable.
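Part of what MCP standardizes is how a tool describes itself. MCP messages are JSON-RPC 2.0; a server's `tools/list` response describes each tool with a `name`, a `description`, and an `inputSchema` written as JSON Schema, and a `tools/call` request names the tool and passes `arguments`. The sketch below builds such a descriptor for a hypothetical `search_flights` tool and checks a call's arguments against it. The checker covers only `required` keys and primitive types, an intentionally tiny subset of JSON Schema; a real implementation would use a full validator.

```python
# Descriptor in the shape an MCP server returns from `tools/list`.
# The tool itself ("search_flights") is hypothetical.
SEARCH_FLIGHTS = {
    "name": "search_flights",
    "description": "Find flights between two airports on a given date.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "origin": {"type": "string"},
            "destination": {"type": "string"},
            "date": {"type": "string"},
            "max_stops": {"type": "integer"},
        },
        "required": ["origin", "destination", "date"],
    },
}

# JSON Schema primitive type names mapped to Python types.
_JSON_TYPES = {"string": str, "integer": int, "number": (int, float),
               "boolean": bool, "object": dict, "array": list}

def check_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return a list of problems; an empty list means the minimal check passed."""
    schema = tool["inputSchema"]
    problems = [f"missing required argument '{name}'"
                for name in schema.get("required", []) if name not in arguments]
    for name, value in arguments.items():
        spec = schema.get("properties", {}).get(name)
        if spec is None:
            continue  # JSON Schema allows extra properties unless told otherwise
        expected = spec["type"]
        # bool is a subclass of int in Python, so reject it explicitly for numbers.
        wrong_bool = isinstance(value, bool) and expected in ("integer", "number")
        if wrong_bool or not isinstance(value, _JSON_TYPES[expected]):
            problems.append(f"argument '{name}' should be {expected}")
    return problems

# A JSON-RPC 2.0 `tools/call` request: the same envelope is used for every tool.
request = {
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "search_flights",
               "arguments": {"origin": "SFO", "destination": "CDG", "date": "2025-06-01"}},
}
print(check_arguments(SEARCH_FLIGHTS, request["params"]["arguments"]))  # []
print(check_arguments(SEARCH_FLIGHTS, {"origin": "SFO", "max_stops": "one"}))
```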

MCP Client: The Bridge Between Agent and Context

The MCP Client serves as a bridge between an AI Agent and the MCP Server. It forwards agent queries, sends requests for context retrieval and tool execution, and lets AI Agents interact with the MCP Server without needing direct access to external services.
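A minimal sketch of the client side follows. `MCPClient` wraps each agent request in a JSON-RPC 2.0 message, sends it through a pluggable transport, and checks that the reply's `id` matches the request. The `tools/list` and `tools/call` methods come from MCP; `fake_transport` and its `get_weather` tool are hypothetical stand-ins for a real server, and the `initialize` handshake and real transports such as stdio or HTTP are left out.

```python
import json
from itertools import count
from typing import Callable

Transport = Callable[[str], str]  # sends one serialized message, returns the serialized reply

class MCPClient:
    """Turns agent requests into JSON-RPC 2.0 messages so the agent never
    calls external services directly."""

    def __init__(self, transport: Transport):
        self._send = transport
        self._ids = count(1)  # request ids must be unique within a session

    def _request(self, method: str, params: dict) -> dict:
        request_id = next(self._ids)
        message = {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
        reply = json.loads(self._send(json.dumps(message)))
        if reply.get("id") != request_id:
            raise RuntimeError("reply id does not match request id")
        if "error" in reply:
            raise RuntimeError(f"{reply['error']['code']}: {reply['error']['message']}")
        return reply["result"]

    def list_tools(self) -> list[str]:
        return [tool["name"] for tool in self._request("tools/list", {})["tools"]]

    def call_tool(self, name: str, arguments: dict) -> str:
        result = self._request("tools/call", {"name": name, "arguments": arguments})
        text = "".join(item["text"] for item in result["content"] if item["type"] == "text")
        if result.get("isError"):
            raise RuntimeError(f"tool {name} failed: {text}")
        return text

# Hypothetical in-process stand-in for a real MCP Server.
def fake_transport(raw: str) -> str:
    msg = json.loads(raw)
    reply = {"jsonrpc": "2.0", "id": msg["id"]}
    if msg["method"] == "tools/list":
        reply["result"] = {"tools": [{
            "name": "get_weather",
            "description": "Current weather for a city.",
            "inputSchema": {"type": "object", "properties": {"city": {"type": "string"}}},
        }]}
    elif msg["method"] == "tools/call":
        city = msg["params"]["arguments"]["city"]
        reply["result"] = {"content": [{"type": "text", "text": f"Sunny in {city}"}], "isError": False}
    else:
        reply["error"] = {"code": -32601, "message": "Method not found"}
    return json.dumps(reply)

client = MCPClient(fake_transport)
print(client.list_tools())                                 # ['get_weather']
print(client.call_tool("get_weather", {"city": "Oslo"}))   # Sunny in Oslo
```

Because the transport is just a function, the same client code could sit in front of any server connection; only `fake_transport` would change.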

MCP Server: Agent’s Knowledge Hub

The MCP Server can act as a centralized memory and processing unit, storing past interactions and retrieving relevant context when needed. It structures responses for AI Agents and connects them to external services, allowing agents to make informed, context-aware decisions.

MCP servers can store knowledge, user history, and evolving context. Just as microservices fetch data from databases, AI Agents fetch context from MCP servers. In customer support, for example, an AI agent that retrieves a customer’s past conversations from an MCP server can pick up where the last exchange left off instead of asking the customer to repeat themselves.
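The customer-support example might look like the following on the server side. This is a sketch, not the official MCP SDK: `handle` takes one JSON-RPC 2.0 message and returns the reply, answering `tools/list` and `tools/call` for a single hypothetical tool, `get_conversation_history`, backed by an in-memory dictionary in place of a database. Failures inside the tool are reported in the result with `isError`, while unknown methods and tools get JSON-RPC errors, mirroring how MCP separates tool errors from protocol errors.

```python
import json

# Hypothetical in-memory store standing in for a database of support conversations.
HISTORY: dict[str, list[str]] = {
    "cust-42": ["2024-05-01: reported a delayed refund", "2024-05-03: refund confirmed"],
}

TOOLS = [{
    "name": "get_conversation_history",
    "description": "Return past support conversations for a customer.",
    "inputSchema": {"type": "object",
                    "properties": {"customer_id": {"type": "string"}},
                    "required": ["customer_id"]},
}]

def get_conversation_history(customer_id: str) -> str:
    entries = HISTORY.get(customer_id)
    if not entries:
        raise LookupError(f"no history for {customer_id}")
    return "\n".join(entries)

def handle(raw: str) -> str:
    """Process one JSON-RPC 2.0 request and return the serialized reply."""
    msg = json.loads(raw)
    reply: dict = {"jsonrpc": "2.0", "id": msg.get("id")}
    method, params = msg.get("method"), msg.get("params", {})
    if method == "tools/list":
        reply["result"] = {"tools": TOOLS}
    elif method == "tools/call":
        if params.get("name") != "get_conversation_history":
            reply["error"] = {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}
        else:
            try:
                text = get_conversation_history(**params.get("arguments", {}))
                reply["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
            except LookupError as exc:
                # Tool failures go inside the result so the agent can react to them.
                reply["result"] = {"content": [{"type": "text", "text": str(exc)}], "isError": True}
            except TypeError:
                reply["error"] = {"code": -32602, "message": "Invalid arguments"}
    else:
        reply["error"] = {"code": -32601, "message": "Method not found"}
    return json.dumps(reply)

def call(name: str, arguments: dict) -> dict:
    """Helper for the usage example: build a tools/call request and decode the reply."""
    request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
               "params": {"name": name, "arguments": arguments}}
    return json.loads(handle(json.dumps(request)))

print(call("get_conversation_history", {"customer_id": "cust-42"})["result"]["content"][0]["text"])
```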

For those interested in building MCP servers, the Model Context Protocol (MCP) Server Development Guide offers a comprehensive walkthrough on the topic.

Bringing It All Together: The New Distributed Architecture

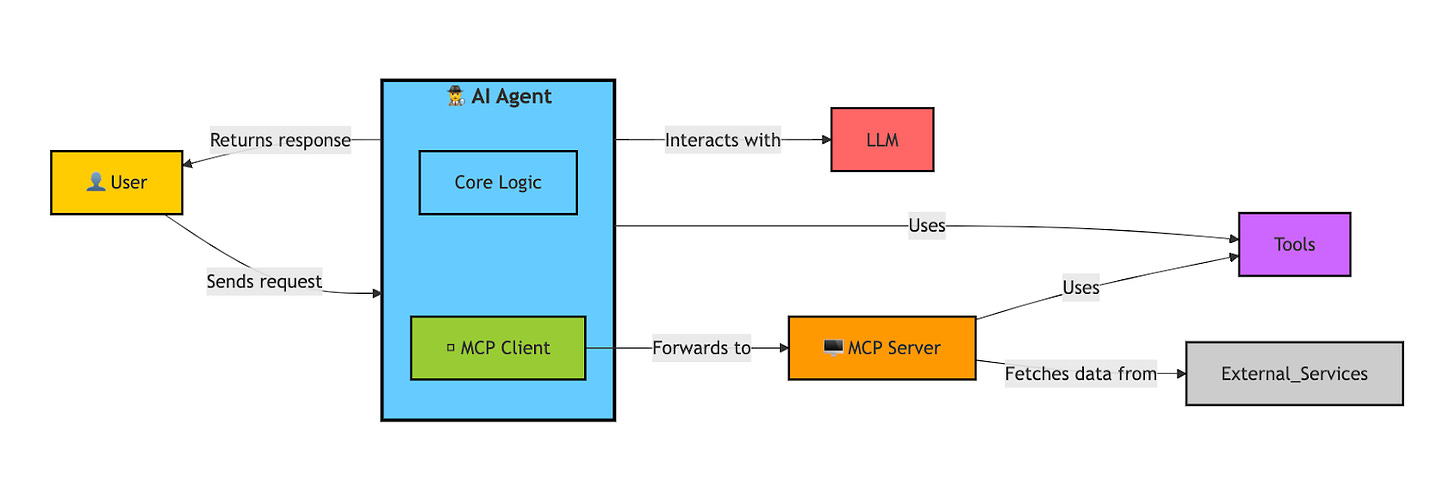

The diagram above illustrates the architecture of an AI Agent and its interactions with various components. The User sends a request, which the AI Agent processes using its Core Logic. The agent may consult an LLM for reasoning, use Tools for specialized tasks, or forward requests via the MCP Client to the MCP Server, which can fetch data from external services or databases. By combining these components, the AI Agent supports adaptive decision-making, multi-step workflows, and real-world interactions, making it more capable than traditional automation.
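The Core Logic's role in the diagram can be sketched as a dispatcher. In this hypothetical version, the LLM is asked what to do and returns a structured `Decision`: answer directly, run a local tool, or forward the call through the MCP Client. `fake_llm` is a keyword-based stub standing in for a real model, and `mcp_call` is a placeholder for the MCP Client and the server behind it.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class Decision:
    kind: str          # "answer", "tool", or "mcp"
    target: str = ""   # name of the local tool or MCP tool to call
    payload: str = ""  # argument for the call, or the answer text

@dataclass
class AgentCore:
    llm: Callable[[str], Decision]                # reasoning: decide what to do next
    local_tools: dict[str, Callable[[str], str]]  # tools that run in-process
    mcp_call: Callable[[str, str], str]           # forwards a call via the MCP Client

    def handle(self, user_request: str) -> str:
        decision = self.llm(user_request)
        if decision.kind == "tool":
            return self.local_tools[decision.target](decision.payload)
        if decision.kind == "mcp":
            return self.mcp_call(decision.target, decision.payload)
        return decision.payload                   # the LLM answered directly

# Hypothetical keyword-based stub in place of a real model.
def fake_llm(request: str) -> Decision:
    if "order" in request.lower():
        return Decision("mcp", "lookup_order", request.split()[-1])
    if request.lower().startswith("uppercase:"):
        return Decision("tool", "uppercase", request.split(":", 1)[1].strip())
    return Decision("answer", payload="I can look up orders or uppercase text.")

agent = AgentCore(
    llm=fake_llm,
    local_tools={"uppercase": str.upper},
    mcp_call=lambda tool, arg: f"{tool}({arg}): shipped",  # stand-in MCP Server reply
)
print(agent.handle("Where is order 1234"))  # lookup_order(1234): shipped
print(agent.handle("uppercase: hello"))     # HELLO
```

Injecting the LLM, tools, and MCP call as plain callables keeps the Core Logic testable without a model or a network.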

Scaling Beyond a Single Agent

While the previous diagram works well for smaller systems, real-world applications typically involve multiple AI Agents and MCP Servers. In such cases, the MCP Client can be decoupled and act as an orchestrator, allowing it to support multiple agents and servers simultaneously.

To achieve this, the orchestrator leverages:

A local context cache to store frequently accessed data.

A context router to intelligently route agent requests to the appropriate MCP Server.

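The cache cuts repeated round trips to MCP Servers and can keep serving recent context if a server becomes unavailable, while the router lets servers be added or replaced without changing the agents. Together, they improve scalability, efficiency, and fault tolerance, helping AI Agents operate reliably across distributed environments.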
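The orchestrator's two pieces can be sketched together. In this hypothetical design, context keys are namespaced (for example `crm:cust-42`), the router uses the prefix before the colon to pick an MCP Server, and results are cached for a fixed time-to-live. If a server call fails after an entry has expired, the stale entry is returned instead, which is one simple way to gain some fault tolerance. Each "server" here is just a Python callable; the namespacing scheme, TTL policy, and stale fallback are assumptions, not part of MCP.

```python
import time
from typing import Callable

Fetch = Callable[[str], str]  # stand-in for asking one MCP Server for context

class ContextOrchestrator:
    def __init__(self, routes: dict[str, Fetch], ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self.routes = routes        # context router: key prefix -> MCP Server
        self.ttl = ttl_seconds
        self.clock = clock          # injectable so cache expiry can be tested
        self._cache: dict[str, tuple[float, str]] = {}  # key -> (expires_at, value)

    def get_context(self, key: str) -> str:
        now = self.clock()
        cached = self._cache.get(key)
        if cached is not None and cached[0] > now:
            return cached[1]                      # fresh cache hit: no round trip
        prefix, sep, _ = key.partition(":")
        server = self.routes.get(prefix) if sep else None
        if server is None:
            raise LookupError(f"no MCP Server registered for {key!r}")
        try:
            value = server(key)                   # route to the owning server
        except Exception:
            if cached is not None:
                return cached[1]                  # server down: serve stale context
            raise
        self._cache[key] = (now + self.ttl, value)
        return value

# Usage with fake servers and a controllable clock.
calls: list[str] = []
crm_up = True

def crm_server(key: str) -> str:
    calls.append(key)
    if not crm_up:
        raise ConnectionError("crm server unavailable")
    return f"profile for {key.split(':', 1)[1]}"

def docs_server(key: str) -> str:
    calls.append(key)
    return f"document {key.split(':', 1)[1]}"

now = [0.0]
orchestrator = ContextOrchestrator({"crm": crm_server, "docs": docs_server},
                                   ttl_seconds=30, clock=lambda: now[0])
print(orchestrator.get_context("crm:cust-42"))   # fetched from the crm server
print(orchestrator.get_context("crm:cust-42"))   # served from the cache
now[0] = 45.0
crm_up = False
print(orchestrator.get_context("crm:cust-42"))   # expired and server down: stale value
```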

Conclusion

I have fond memories of building TCP/UDP client/server applications on Unix. I remember using Sun Microsystems’ rpcgen to generate RPC client and server stubs for networked applications back in the mid-'90s. So I was excited when Anthropic introduced the Model Context Protocol (MCP) in 2024, though initially skeptical, especially since the initial specification provided minimal guidance on authentication and authorization. MCP implementations are free to choose their authentication (authn) and authorization (authz) mechanisms, whether API Keys, JWT, or OAuth. In addition, an MCP Server can connect to any tool or external service and enforce its own authentication and authorization policies for accessing remote databases and APIs.
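As an illustration of a server enforcing its own policies, here is a hedged sketch of API-key authentication plus a per-key list of allowed tools. The `Authorization: Bearer` header convention, the key names, and the tool permissions are assumptions for this example, not something MCP mandates; in production the keys would come from a secret store, and JWT or OAuth could replace them entirely.

```python
import hmac
from typing import Optional

# Hypothetical key store: API key -> tools that key may call.
# Production systems would store hashed keys in a secret manager.
API_KEYS: dict[str, set[str]] = {
    "key-support-team": {"get_conversation_history"},
    "key-admin": {"get_conversation_history", "delete_customer"},
}

def authenticate(headers: dict[str, str]) -> Optional[str]:
    """Authn: return the caller's API key if the bearer token is known, else None."""
    scheme, _, token = headers.get("Authorization", "").partition(" ")
    if scheme != "Bearer" or not token:
        return None
    for key in API_KEYS:
        # compare_digest avoids leaking how many leading characters matched
        if hmac.compare_digest(token.encode(), key.encode()):
            return key
    return None

def authorize(key: str, tool_name: str) -> bool:
    """Authz: is this key allowed to call this tool?"""
    return tool_name in API_KEYS.get(key, set())

def check_request(headers: dict[str, str], tool_name: str) -> int:
    """Return an HTTP-style status: 401 unauthenticated, 403 forbidden, 200 allowed."""
    key = authenticate(headers)
    if key is None:
        return 401
    return 200 if authorize(key, tool_name) else 403

print(check_request({"Authorization": "Bearer key-support-team"}, "delete_customer"))  # 403
```

Keeping authn and authz as separate functions makes it easy to swap the key check for token validation later without touching the permission model.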

Looking back, networked applications used a portmapper to help clients discover server ports dynamically. Today, an MCP Client does not inherently know about all available MCP Servers, requiring either static configuration or service discovery. In past projects, we had to refactor monoliths into multiple microservices for scalability. I hope we apply those lessons here and avoid designing monolithic MCP servers that attempt to be a Swiss Army knife.

The pace at which MCP servers are emerging is staggering. While the early signs are promising, only time will tell whether MCP adoption reaches the ubiquity of HTTP. For now, engineers would be wise to stay ahead of the curve, experiment with AI agents, and explore MCP’s role in future architectures.

I’ve barely scratched the surface of this topic. Writing this helped me clarify my own understanding of AI Agents, their components, and how they interact within modern architectures. There’s much more to explore, and I look forward to seeing how this ecosystem evolves.