The Age of Agentic AI: Building a Connected Assistant

Turning AI from reactive tools into proactive collaborators

Introduction

Last year, during my first month in a new role, I proposed an AI Agent framework to empower developers. That proposal didn’t secure funding. At the time, several open-source agent frameworks already existed: LangChain, LangGraph, CrewAI, and others. I experimented with a few of them, and each had its strengths and weaknesses. Six months later, Strands Agents, an open-source AI Agents SDK, was launched.

So, why build agents with Strands or any of the other frameworks? The AI landscape is shifting. Users no longer want to simply copy and paste responses from Large Language Models (LLMs). The next step is Agentic AI products and services that improve customer experience, boost employee productivity, and drive innovation.

AI Agents & Agentic AI

Before diving into why agents matter, let’s define some terms.

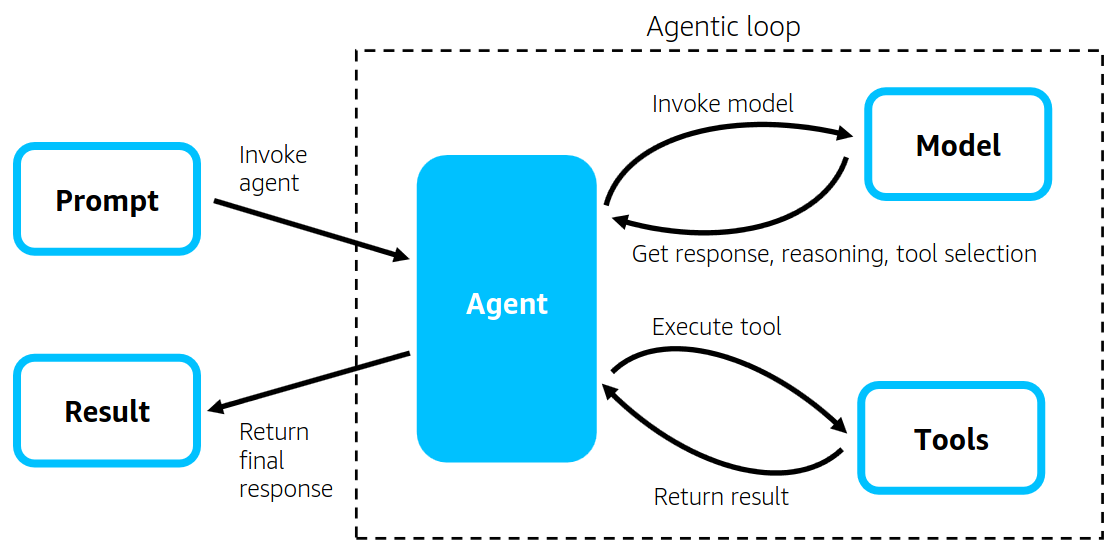

AI Agents are like microservices for AI. They’re self-contained software entities built on top of LLMs. An agent takes a goal as input, creates a plan, calls tools or APIs as needed, and adapts based on the results.
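To make the goal, plan, tool call, and adapt cycle concrete, here is a minimal sketch in plain Python. It is not the Strands Agents API: the tools, the ticket data, and the fixed `plan` function are hypothetical stand-ins, and in a real agent an LLM would produce the plan.

```python
from typing import Callable

# Hypothetical tools: plain functions standing in for real APIs.
def lookup_ticket(ticket_id: str) -> str:
    tickets = {"T-1": "Login page returns HTTP 500"}  # made-up data
    return tickets.get(ticket_id, "not found")

def summarize(text: str) -> str:
    return f"Summary: {text}"

TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_ticket": lookup_ticket,
    "summarize": summarize,
}

def plan(goal: str) -> list[str]:
    """Stand-in for the LLM planning step: returns a fixed plan."""
    return ["lookup_ticket", "summarize"]

def run_agent(goal: str, first_input: str) -> str:
    """Run each planned tool on the previous result, adapting on failure."""
    result = first_input
    for tool_name in plan(goal):
        result = TOOLS[tool_name](result)
        if result == "not found":
            # Adapt: stop early instead of summarizing a missing ticket.
            return "Could not find the ticket; ask the user for a valid ID."
    return result

print(run_agent("Summarize ticket T-1", "T-1"))
```

The early return is the "adapt" step in miniature: the agent changes course based on what a tool returned instead of blindly finishing the plan.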

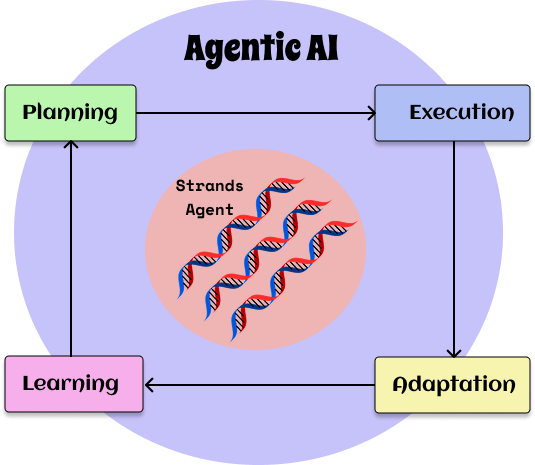

Agentic AI is the broader concept. It’s the paradigm that allows AI systems to operate in an autonomous, goal-driven, multi-step way, whether as a single agent, a swarm of agents, or agents embedded within larger systems.

In short: Agentic AI is the concept; AI Agents are one way to implement it.

Source: Introducing Strands Agents

AI agents shine when a task is not a single command but a dynamic, multi-step workflow. For example, an agent might:

Orchestrate a series of tool calls.

Pull context from a database.

Draft a summary for the user.

Traditionally, this would require multiple prompts and manual data transfers between them. Agents adapt dynamically and bring in relevant information automatically, which makes them especially valuable for personalized interactions.

Enterprise AI Assistant

Think of “AI mode” in Google Search. It goes beyond returning a list of links. Instead, it processes and synthesizes information into a direct, conversational answer.

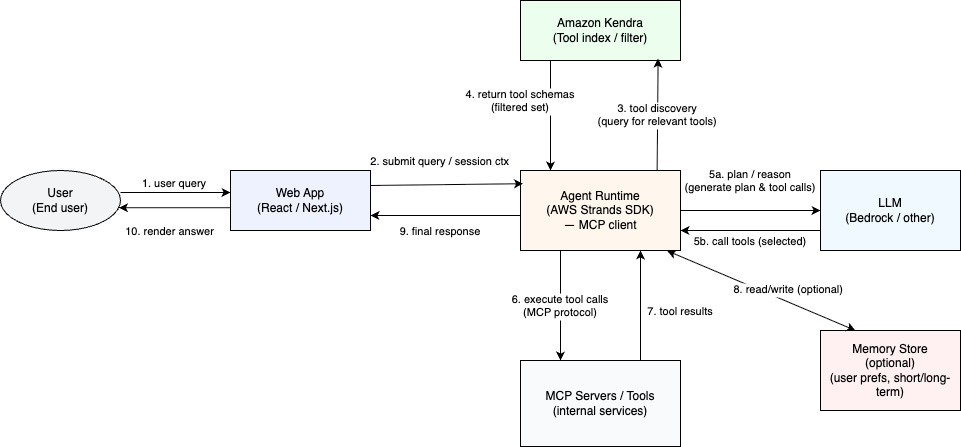

I wanted to bring that experience inside the enterprise. My goal was to create an MCP-powered AI agent that didn’t just find facts in internal documentation but also helped manage complex, context-rich workflows.

The agent’s job is simple to describe:

Gather the right information for the user’s goal.

Create a plan.

Break the work into manageable sub-tasks.

Act: execute each sub-task.

Adapt and learn based on the results.

While tools like Amazon Q CLI already exist, none that I found offers a web interface that’s integrated with MCP and works equally well for developers and non-developers. I built a proof-of-concept with the Strands Agents SDK.

Because it’s a single-agent solution, it leans heavily on MCP servers. Most MCP servers are large monoliths filled with tools. While this makes distribution easier, it clutters the context window with irrelevant tool descriptions.

The fix: select only the tools relevant to each query, dynamically.
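One simple way to approximate this is to score each tool description against the query and pass only the top matches to the model. The sketch below uses keyword overlap purely for illustration. The tool catalog, the stop-word list, and the `select_tools` helper are assumptions rather than the approach used in the proof-of-concept, and a production system would more likely use embeddings or a retrieval index.

```python
import re

# Hypothetical tool catalog; names and descriptions are illustrative only.
TOOL_CATALOG = {
    "search_tickets": "Search issue tracker tickets by keyword, severity, or status",
    "get_oncall_schedule": "Return the on-call schedule and current on-call engineer",
    "create_pull_request": "Create a pull request in a code repository",
    "query_wiki": "Search internal documentation and wiki pages",
}

# Small stop-word list so filler words do not count as matches.
STOP_WORDS = {"a", "an", "and", "by", "for", "in", "is", "or", "the", "this"}

def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its distinct words minus stop words."""
    return set(re.findall(r"[a-z]+", text.lower())) - STOP_WORDS

def select_tools(query: str, catalog: dict[str, str], top_k: int = 2) -> list[str]:
    """Return up to top_k tool names whose text shares words with the query."""
    query_words = tokenize(query)
    scored = []
    for name, description in catalog.items():
        tool_words = tokenize(name.replace("_", " ") + " " + description)
        overlap = len(query_words & tool_words)
        if overlap > 0:
            scored.append((overlap, name))
    # Highest overlap first; ties broken alphabetically for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:top_k]]

print(select_tools("Who is on call this week?", TOOL_CATALOG))
print(select_tools("Open a pull request for the fix", TOOL_CATALOG))
```

Only the selected tool descriptions would then be placed in the prompt, which keeps the context window focused on what the query actually needs.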

By narrowing the scope and using reasoning methods like ReAct, the assistant executes workflows with greater precision. Every action is grounded in verified internal context, which reduces the risk of hallucinations. Whether generating an on-call report, surfacing critical tickets, or drafting a pull request, it uses trusted data sources to deliver accurate, actionable results.
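For readers unfamiliar with ReAct, the pattern interleaves a thought, an action (a tool call), and an observation (the tool result) until the model can answer. The sketch below shows only the control flow. `scripted_model` is a hypothetical stand-in for an LLM call, and the ticket counts are made-up data.

```python
from typing import Callable

def count_open_tickets(severity: str) -> str:
    # Hypothetical data source standing in for a real ticketing API.
    open_tickets = {"critical": 2, "minor": 7}
    return str(open_tickets.get(severity, 0))

TOOLS: dict[str, Callable[[str], str]] = {"count_open_tickets": count_open_tickets}

def scripted_model(transcript: list[str]) -> dict[str, str]:
    """Stand-in for an LLM: a real model would generate these steps."""
    if not any(line.startswith("Observation:") for line in transcript):
        return {"thought": "I need the number of critical tickets.",
                "action": "count_open_tickets", "input": "critical"}
    observation = transcript[-1].removeprefix("Observation: ")
    return {"thought": "I have what I need.",
            "final": f"There are {observation} open critical tickets."}

def react(question: str, model, max_steps: int = 5) -> tuple[str, list[str]]:
    """Alternate thought, action, and observation until a final answer."""
    transcript = [f"Question: {question}"]
    for _ in range(max_steps):
        step = model(transcript)
        transcript.append(f"Thought: {step['thought']}")
        if "final" in step:
            return step["final"], transcript
        transcript.append(f"Action: {step['action']}[{step['input']}]")
        observation = TOOLS[step["action"]](step["input"])
        transcript.append(f"Observation: {observation}")
    return "Stopped: step limit reached.", transcript

answer, transcript = react("How many critical tickets are open?", scripted_model)
print("\n".join(transcript))
print(answer)
```

Because the final answer is built from the observation rather than from the model's memory, the loop shows in a small way what grounding an action in retrieved context means. The `max_steps` cap is a common safeguard against a loop that never converges.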

When Agents Are Overkill

Agents aren’t a silver bullet. They can be over-engineered for simple, one-shot Q&A tasks or fixed workflows where the logic never changes.

AI Agents are generally non-deterministic: the same input can yield different outputs depending on context, prompt wording, or even randomness in the model. For example, when people asked GPT-5 who the current US president was, some got “Kamala Harris” while others saw “Donald Trump.” The answer varied based on timing, phrasing, and context.
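The randomness part can be illustrated with a toy version of temperature sampling. The scores below are made up and the `sample_token` helper is hypothetical. Real models sample over a large vocabulary at every token, but the principle is the same: greedy decoding always picks the top score, while sampling can pick a different candidate on each call.

```python
import math
import random

def sample_token(logits: dict[str, float], temperature: float,
                 rng: random.Random) -> str:
    """Pick one candidate: greedy at temperature 0, softmax sampling otherwise."""
    if temperature == 0:
        return max(logits, key=logits.get)
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled.values()]
    return rng.choices(list(scaled), weights=weights, k=1)[0]

# Made-up scores for three candidate answers to the same prompt.
logits = {"answer_a": 2.0, "answer_b": 1.8, "answer_c": 0.5}

# Seeded so the run is repeatable; the calls still differ from one another.
rng = random.Random(7)
greedy = {sample_token(logits, 0, rng) for _ in range(200)}
sampled = {sample_token(logits, 1.0, rng) for _ in range(200)}

print(greedy)   # greedy decoding yields a single distinct answer
print(sampled)  # sampling yields more than one distinct answer
```

With two candidates scoring almost the same, sampling flips between them, which is how the same question can produce different answers.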

This adaptability is powerful for reasoning but risky when you need consistent results. For deterministic workflows, where the same input must always give the same output, Robotic Process Automation (RPA) is a better fit. RPA follows exact rules and produces identical results every time. Running prewritten unit tests and sending a report to a dev team is a good example.
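The unit-test example fits in a short script with no model in the loop. This sketch uses Python's standard `unittest` module. The two tests and the `send_report` placeholder are hypothetical, and a real pipeline would load an existing suite and deliver the report by email or chat.

```python
import io
import unittest

class PrewrittenTests(unittest.TestCase):
    # Hypothetical tests standing in for an existing suite.
    def test_addition(self):
        self.assertEqual(1 + 1, 2)

    def test_upper(self):
        self.assertEqual("ok".upper(), "OK")

def run_suite_and_build_report() -> str:
    """Run the fixed suite and format a one-line report."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(PrewrittenTests)
    runner = unittest.TextTestRunner(stream=io.StringIO(), verbosity=0)
    result = runner.run(suite)
    status = "PASSED" if result.wasSuccessful() else "FAILED"
    return (f"Test report: {status} | run={result.testsRun} "
            f"failures={len(result.failures)} errors={len(result.errors)}")

def send_report(report: str, outbox: list[str]) -> None:
    # Placeholder for email or chat delivery; here it appends to a list.
    outbox.append(report)

outbox: list[str] = []
send_report(run_suite_and_build_report(), outbox)
print(outbox[0])
```

Every run follows the same steps in the same order and produces the same report, which is the property you give up when an agent decides the steps at runtime.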

A useful rule of thumb:

Use agents when a task needs multiple tools, depends on intermediate results, and changes with context.

Use scripts, APIs, or RPA when the task is fixed and predictable.

Adaptive bug triaging and resolution planning is a great example where an AI Agent is the right choice.

Closing – The Path Forward

The true revolution of Agentic AI isn’t about chasing the “smartest” model. It’s about making models better connectors to the systems that matter most to your business.

The Strands Agents + MCP + knowledge base architecture provides a solid starting point. Begin with a small set of tools focused on a specific use case, then test the agent’s capabilities and expand gradually as you see positive results. From there, you can enhance the system with memory persistence so agents can learn from past interactions, Amazon Bedrock integration for more flexible model choices per task, and user profiles for more personalized tool selection.

Authentication in MCP is still evolving, so starting with a single-agent use case makes sense. Multi-agent solutions will grow in importance, but agent-to-agent communication is still early-stage. Be ready to refactor or even replace early versions as standards emerge.

In many ways, the agentic loop mirrors the OODA loop (Observe, Orient, Decide, Act), a framework from strategy and decision-making. Both emphasize continuous learning, rapid adaptation, and refining actions based on new information, which makes them well-suited for navigating complex, changing environments.

While many are busy dissecting the just-released GPT-5, I’m focusing on AI Agents and Agentic AI. These may seem like “yesterday’s news” in the fast-moving AI world, but they are foundational for what’s next. My approach is simple: build, explore, make mistakes, and learn, which is itself an ongoing agentic loop. For me, progress is not measured by speed, but by direction. And the direction is clear: build one agent at a time, as needed.